How to integrate Datadog with AWS ECS using AWS CDK

Amazon Elastic Container Service (ECS) is AWS’s fully managed container orchestration service that allows you to easily deploy and scale containers. Something you’ll want to implement sooner rather than later is some fundamental observability, to keep track of your containers and ensure that everything is running as expected.

Datadog is one of the leading providers of cloud observability, and the insights it generates out of the box after ingesting your data are second to none. They are an AWS global partner, after all!

It’s been one of the providers I’ve used most over the past couple of years, so I’ve configured its agent on several compute types, most recently on AWS ECS using CDK!

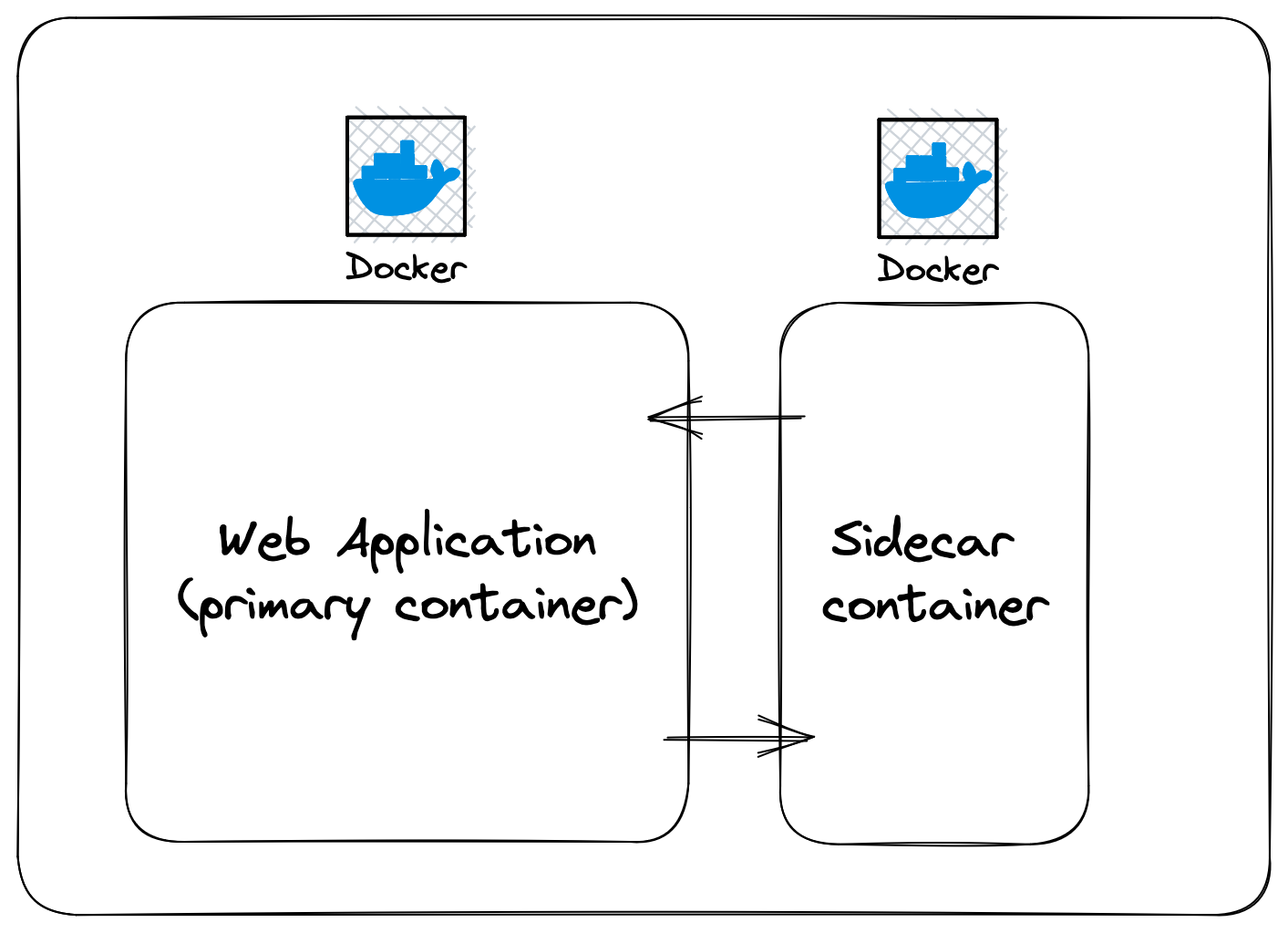

🏍 Sidecar pattern

When we’re talking about containerised applications, we’re usually building business logic as part of a service that is nicely wrapped up and portable. The beauty of containers is that each one is its own isolated sandbox and can run in whatever runtime it needs: for example, I might have a web service running in Node.js and a background worker running in Python. This is great for picking the right tool for each use case and developing services in isolation, but for centralised concerns like logging, you don’t necessarily want to rewrite the same client in every runtime. This is where sidecars come in.

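A sidecar is a separate container that runs alongside your primary container, in the same task, to provide additional functionality. On Fargate, containers in the same task share a network namespace, so your application can reach the sidecar over localhost.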

✍️ Configuring CDK

If you have used AWS ECS before, you’ll be familiar with the terminology around clusters, services, task definitions and containers. Fargate is also usually my go-to choice for the underlying compute, as it means we don’t have to worry about provisioning instances under the hood!

Adding a sidecar alongside your primary container is really easy with AWS CDK: you just attach it to your task definition, providing the container image and any other metadata required.

Note: the following code snippets assume that you have also provisioned the cluster, service and other resources required for ECS.

Firstly, you’ll define your task definition:

```typescript
import * as ecs from "aws-cdk-lib/aws-ecs";
import * as iam from "aws-cdk-lib/aws-iam";

// Inside your Stack constructor, so `this` is the stack scope
const cpu = 256;
const memory = 512;

// Consume an existing role from its ARN, or provision a new one
const taskRole = iam.Role.fromRoleArn(this, "FargateTaskDefinitionRole", "<<arn>>");

const ecsFargateTaskDefinition = new ecs.FargateTaskDefinition(
  this,
  "FargateTaskDefinition",
  {
    cpu: cpu,
    memoryLimitMiB: memory,
    taskRole: taskRole,
  }
);
```

This provisions a fairly lightweight task definition with 256 CPU units (0.25 vCPU) and 512 MiB of memory, using a task role that we’ve defined elsewhere.
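The 256/512 pairing works because Fargate only accepts certain combinations of task CPU and memory. As a sketch, a small guard like the one below can catch an invalid pairing before you deploy. `isValidFargateSize` is a hypothetical helper, and the table is an assumption transcribed from the AWS Fargate task size documentation for the 256 to 4096 CPU unit tiers only, so check the current docs before relying on it.

```typescript
// Inclusive numeric range: range(1024, 4096, 1024) gives [1024, 2048, 3072, 4096]
function range(start: number, end: number, step: number): number[] {
  const values: number[] = [];
  for (let v = start; v <= end; v += step) {
    values.push(v);
  }
  return values;
}

// Allowed memory values (MiB) for each Fargate task CPU setting (CPU units).
// Assumption: transcribed from the AWS Fargate task size table, 256-4096 tiers only.
const FARGATE_MEMORY_OPTIONS: { [cpu: number]: number[] } = {
  256: [512, 1024, 2048],
  512: range(1024, 4096, 1024),
  1024: range(2048, 8192, 1024),
  2048: range(4096, 16384, 1024),
  4096: range(8192, 30720, 1024),
};

// Hypothetical guard: true only if Fargate accepts this CPU/memory pairing
function isValidFargateSize(cpu: number, memoryMiB: number): boolean {
  const options = FARGATE_MEMORY_OPTIONS[cpu];
  return options !== undefined && options.indexOf(memoryMiB) !== -1;
}

// The task size used in the article
const taskCpu = 256;
const taskMemoryMiB = 512;
if (!isValidFargateSize(taskCpu, taskMemoryMiB)) {
  throw new Error(`Unsupported Fargate task size: ${taskCpu} CPU units / ${taskMemoryMiB} MiB`);
}
```

Running a check like this at the top of your stack means a typo in `cpu` or `memory` fails at synth time rather than during deployment.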

Next, we can add our sidecar container. Datadog publishes its agent as a container image for exactly this use case, and it’s also available in the public ECR repository, so we can point our container at that image.

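```typescript
import * as ecs from "aws-cdk-lib/aws-ecs";

// Assume the primary container has already been attached with the same syntax as below,
// but pointing at our application image

// process.env values are `string | undefined`; fail fast instead of deploying without a key
const ddApiKey = process.env.DD_API_KEY;
if (!ddApiKey) {
  throw new Error("DD_API_KEY must be set in the environment running cdk deploy");
}

const datadogContainer = ecsFargateTaskDefinition.addContainer(
  "DatadogAgentSidecarContainer",
  {
    image: ecs.ContainerImage.fromRegistry(
      "public.ecr.aws/datadog/agent:latest"
    ),
    environment: {
      ECS_FARGATE: "true",
      DD_API_KEY: ddApiKey,
      DD_APM_ENABLED: "true",
    },
  }
);

// Expose the port the agent listens on for APM traces
datadogContainer.addPortMappings({
  containerPort: 8126,
  protocol: ecs.Protocol.TCP,
});
```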
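The snippet above assumes the primary container is already attached. As a hedged sketch of that other half, here is a self-contained stack in which a hypothetical `AppContainer` (the image `my-org/my-app:latest` is a placeholder) points its Datadog tracer at the sidecar and asks ECS to start the agent first. Because containers in the same Fargate task share a network namespace, the agent is reachable on `localhost:8126`. `DD_AGENT_HOST` and `DD_TRACE_AGENT_PORT` are the standard Datadog tracer settings, and `localhost` and `8126` are already their defaults, so setting them mainly documents intent. The API key is left out for brevity.

```typescript
import { App, Stack } from "aws-cdk-lib";
import * as ecs from "aws-cdk-lib/aws-ecs";

const app = new App();
const stack = new Stack(app, "DatadogSidecarExampleStack");

const taskDefinition = new ecs.FargateTaskDefinition(stack, "FargateTaskDefinition", {
  cpu: 256,
  memoryLimitMiB: 512,
});

// Datadog agent sidecar (API key omitted here for brevity)
const datadogContainer = taskDefinition.addContainer("DatadogAgentSidecarContainer", {
  image: ecs.ContainerImage.fromRegistry("public.ecr.aws/datadog/agent:latest"),
  environment: { ECS_FARGATE: "true", DD_APM_ENABLED: "true" },
  portMappings: [{ containerPort: 8126, protocol: ecs.Protocol.TCP }],
});

// Primary application container (hypothetical image name)
const appContainer = taskDefinition.addContainer("AppContainer", {
  image: ecs.ContainerImage.fromRegistry("my-org/my-app:latest"),
  environment: {
    // Containers in one Fargate task share localhost, so the tracer can reach the agent directly
    DD_AGENT_HOST: "localhost",
    DD_TRACE_AGENT_PORT: "8126",
  },
});

// Ask ECS to start the agent container before the application container
appContainer.addContainerDependencies({
  container: datadogContainer,
  condition: ecs.ContainerDependencyCondition.START,
});
```

`ContainerDependencyCondition.START` only waits for the agent container to start, not for it to be ready to accept traces; the `HEALTHY` condition would additionally require a health check on the agent container.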

You’ll notice that, as well as pointing the image at the public ECR repository, we’re also setting some environment variables for the container. The right set will probably depend on your setup, so check out the Datadog docs for the full list.

Note: rather than reading your Datadog API key from a local environment variable, you can also store it in the likes of Secrets Manager and pull it in dynamically.
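As a sketch of that Secrets Manager approach, a container definition’s `secrets` property accepts `ecs.Secret.fromSecretsManager(...)`, so ECS injects the key as an environment variable when the task starts and only the secret’s ARN appears in the synthesized template. CDK also grants the task’s execution role read access to the secret. The secret name `datadog/api-key` is hypothetical and assumes you’ve already created a secret whose value is the raw API key.

```typescript
import { App, Stack } from "aws-cdk-lib";
import * as ecs from "aws-cdk-lib/aws-ecs";
import * as secretsmanager from "aws-cdk-lib/aws-secretsmanager";

const app = new App();
const stack = new Stack(app, "DatadogSecretExampleStack");

const taskDefinition = new ecs.FargateTaskDefinition(stack, "FargateTaskDefinition", {
  cpu: 256,
  memoryLimitMiB: 512,
});

// Reference an existing secret (hypothetical name) holding the raw Datadog API key
const ddApiKeySecret = secretsmanager.Secret.fromSecretNameV2(
  stack,
  "DatadogApiKeySecret",
  "datadog/api-key",
);

taskDefinition.addContainer("DatadogAgentSidecarContainer", {
  image: ecs.ContainerImage.fromRegistry("public.ecr.aws/datadog/agent:latest"),
  environment: {
    ECS_FARGATE: "true",
    DD_APM_ENABLED: "true",
  },
  // Injected by ECS at task start; CDK grants the execution role read access to the secret
  secrets: {
    DD_API_KEY: ecs.Secret.fromSecretsManager(ddApiKeySecret),
  },
  portMappings: [{ containerPort: 8126, protocol: ecs.Protocol.TCP }],
});
```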

Finally, we’re adding a port mapping for the port the agent listens on for traces (8126) and the protocol it uses. Containers in the same Fargate task can already reach each other over localhost, but if anything outside the task needs to send data to the agent, make sure your security group allows traffic on this port too.

And that’s it! Well, pretty much. If your other containers use custom log drivers, you might want to configure one in the Datadog agent’s container definition as well, but again, that’s use-case specific.
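For example, if your application containers ship their output to CloudWatch Logs with the `awslogs` driver, a hedged sketch of giving the agent the same treatment is below. The stream prefix `datadog-agent` is a hypothetical choice, and whichever driver you actually use would go in the same `logging` property.

```typescript
import { App, Stack } from "aws-cdk-lib";
import * as ecs from "aws-cdk-lib/aws-ecs";

const app = new App();
const stack = new Stack(app, "DatadogLoggingExampleStack");

const taskDefinition = new ecs.FargateTaskDefinition(stack, "FargateTaskDefinition", {
  cpu: 256,
  memoryLimitMiB: 512,
});

taskDefinition.addContainer("DatadogAgentSidecarContainer", {
  image: ecs.ContainerImage.fromRegistry("public.ecr.aws/datadog/agent:latest"),
  environment: { ECS_FARGATE: "true", DD_APM_ENABLED: "true" },
  // Send the agent's own output to CloudWatch Logs (CDK creates a log group if none is given)
  logging: ecs.LogDrivers.awsLogs({ streamPrefix: "datadog-agent" }),
});
```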

Run your deployment and you should have a Datadog agent sidecar container running alongside your application in the same task!

💰 But wait, does it cost more?

I now have two containers running instead of just my single application - does this double the cost?

In terms of AWS ECS, no. A sidecar container is attached to your task definition, and since CPU and memory are allocated (and, on Fargate, billed) at the task definition level, the sidecar is bound by those same constraints: it shares the compute resources you’ve already allocated to your primary container.

Now, in theory, if you attached a processing-intensive sidecar, you might see some side effects, such as containers cycling because they run out of resources. But you’ve implemented Datadog for monitoring, so you’ll know before that happens, right? It’s all come full circle 😄
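If you’d rather make that trade-off explicit than let both containers compete for the whole task, CDK’s `addContainer` options include per-container `cpu` and `memoryLimitMiB`. The arithmetic is simple, but here is a sketch with a hypothetical `splitTaskBudget` helper and an illustrative slice of 64 CPU units and 128 MiB for the agent (not a Datadog recommendation, so size it from your own metrics):

```typescript
interface ResourceBudget {
  cpu: number; // CPU units (1024 = 1 vCPU)
  memoryMiB: number; // MiB
}

// Hypothetical helper: reserve a fixed slice of the task for the sidecar
// and give whatever remains to the primary container.
function splitTaskBudget(task: ResourceBudget, sidecar: ResourceBudget): ResourceBudget {
  const app = {
    cpu: task.cpu - sidecar.cpu,
    memoryMiB: task.memoryMiB - sidecar.memoryMiB,
  };
  if (app.cpu <= 0 || app.memoryMiB <= 0) {
    throw new Error("Sidecar reservation leaves no room for the primary container");
  }
  return app;
}

// The 256 CPU unit / 512 MiB task from earlier, with an illustrative agent slice
const appBudget = splitTaskBudget({ cpu: 256, memoryMiB: 512 }, { cpu: 64, memoryMiB: 128 });
// appBudget is { cpu: 192, memoryMiB: 384 }; these could be passed as `cpu` and
// `memoryLimitMiB` when calling addContainer for the application container.
```

Because Fargate bills on the task-level CPU and memory, carving up the budget like this doesn’t change the cost; only raising the task’s own `cpu` or `memoryLimitMiB` would.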

Conclusion

- Within container land, sidecars are modular, separate containers that get attached to your primary in order to provide additional functionality.

- Simply attach the Datadog agent container definition to your existing (or new!) ECS task definitions.

- Happy monitoring and dashboardin'.